When you bring AI into your media workflows, it’s not just about cool features or faster editing. It’s about trust, control, and responsibility. For enterprise teams, that means asking the hard-hitting questions: How safe is your data, really? Who controls access? What happens if something goes wrong?

Here’s how Descript thinks about those questions and how we’ve built technical, compliance, and security safeguards with real teams in mind.

The stakes are real

If you’re managing content at scale, a security breach or compliance violation isn’t just an IT problem. It’s a reputational risk, a legal liability, and a signal to your audience (and your board) that things weren’t taken seriously.

When AI is involved, the stakes rise. Transcripts, source audio/video, even custom voice models are all sensitive assets. If handled poorly, they can be misused, exposed, or manipulated.

So before you adopt an AI-powered editing tool, here’s what you should demand at a bare minimum, and what Descript brings to the table.

Key technical & security considerations

Here are the areas you should scrutinize:

1. Encryption & data protection

Your data must be encrypted at rest and in transit. That means all stored files (video, audio, transcripts) are protected, and anything moving between your system and the editing service goes over secure channels.

What we do:

At Descript, we use AES-256 encryption for data at rest and HTTPS over TLS 1.2 for data in transit.
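For readers who want to see what "TLS 1.2 for data in transit" means on the client side, here is a minimal sketch using Python's standard `ssl` module. It is an illustration, not Descript's code: it keeps certificate and hostname verification switched on and refuses to negotiate any protocol older than TLS 1.2. The function name `make_client_context` is hypothetical.

```python
import ssl


def make_client_context() -> ssl.SSLContext:
    # Library defaults for a client: verify the server certificate
    # and check that it matches the hostname being contacted.
    ctx = ssl.create_default_context(ssl.Purpose.SERVER_AUTH)
    # Refuse to negotiate anything older than TLS 1.2.
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx
```

A context like this can be passed to standard-library clients, for example `urllib.request.urlopen(url, context=ctx)`.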

2. Secure infrastructure & access controls

If your editing tool’s infrastructure is lax, you’re exposing your content to risk.

- Secrets and credentials should be stored encrypted in managed environments (e.g., the encrypted storage offered by cloud providers).

- Access to sensitive systems must require multi-factor authentication (MFA).

- Physical security matters too: Data centers and offices should have controlled access.

What we do:

We use encrypted storage systems provided by Google Cloud and AWS, enforce MFA for our internal systems, and maintain a physical security plan with controlled office access.
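MFA can take several forms (hardware security keys, push approvals, one-time codes), and the text above does not say which one Descript uses internally. As one common example, here is a minimal sketch of how a time-based one-time password (TOTP, RFC 6238) is generated and checked with Python's standard library. The function names and the one-step drift window are illustrative choices, not a description of Descript's systems.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    # RFC 4226: HMAC-SHA1 over the counter as an 8-byte big-endian integer.
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    # Dynamic truncation: the low 4 bits of the last byte choose a 4-byte window.
    offset = mac[-1] & 0x0F
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10**digits).zfill(digits)


def totp(secret: bytes, at: float, step: int = 30, digits: int = 6) -> str:
    # RFC 6238: HOTP with the counter derived from Unix time.
    return hotp(secret, int(at // step), digits)


def verify_totp(
    secret: bytes,
    submitted: str,
    at: Optional[float] = None,
    step: int = 30,
    digits: int = 6,
    window: int = 1,
) -> bool:
    # Accept codes from adjacent time steps to tolerate small clock drift.
    now = time.time() if at is None else at
    current = int(now // step)
    for counter in range(current - window, current + window + 1):
        if counter < 0:
            continue
        # Constant-time comparison so timing doesn't reveal partial matches.
        if hmac.compare_digest(hotp(secret, counter, digits), submitted):
            return True
    return False
```

In production you would rely on a vetted library and keep the shared secret in encrypted, managed storage, in line with the secrets-management point above.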

3. AI feature governance & consent

Allowing AI to manipulate media (voices, transcripts, avatars) introduces new risks: impersonation, deepfakes, and misuse of voice data, for starters.

Best practices include:

- Opt-in control: Users should explicitly choose to use AI features.

- Voice consent: Only use recordings from speakers who have given explicit consent.

- Transparency: Let users know what data is used and how it’s handled.

- Monitoring: Audit AI features via tests, scans, and reviews.

What we do:

Descript’s AI features are all opt-in, and users control what data they provide; Descript doesn’t automatically collect media from users’ systems. Custom voices can only be created from recordings of speakers who have given consent. We also run annual security reviews and vulnerability scans of AI behavior.
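The opt-in and voice-consent practices above boil down to two checks: is the feature switched on, and did each speaker consent? This is a minimal sketch with a hypothetical data model (`WorkspaceSettings`, `Recording`, and the `"custom_voice"` feature name are invented for illustration); it does not describe how Descript implements these controls.

```python
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class Recording:
    speaker: str
    consent_given: bool  # explicit consent recorded for this speaker and recording


@dataclass
class WorkspaceSettings:
    # Opt-in by construction: no AI feature is enabled until the user adds it.
    enabled_ai_features: Set[str] = field(default_factory=set)


def can_use_feature(settings: WorkspaceSettings, feature: str) -> bool:
    return feature in settings.enabled_ai_features


def recordings_for_custom_voice(
    settings: WorkspaceSettings, recordings: List[Recording]
) -> List[Recording]:
    # Refuse outright if the user never opted in to the feature.
    if not can_use_feature(settings, "custom_voice"):
        raise PermissionError("custom_voice has not been enabled for this workspace")
    # Even when enabled, drop any recording without explicit speaker consent.
    return [r for r in recordings if r.consent_given]
```

Failing closed (raising instead of silently proceeding) keeps the default state of every AI feature "off" even if a caller forgets to check.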

4. Vulnerability management & responsible disclosure

Even the best systems will have bugs. What matters is how quickly and transparently they’re fixed.

Which is why you need:

- Continuous vulnerability scanning of the code and dependencies

- Prioritized remediation policies (critical and high severity first)

- Formal processes for patching and deployment

- A clear path for security researchers to report issues

What we do:

We run continuous vulnerability scans of our systems, categorize issues by severity, and resolve them within timelines defined by internal policies.
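Severity-based remediation usually comes down to mapping each severity to a deadline and working the queue in that order. The sketch below assumes hypothetical remediation windows (7, 30, 90, and 180 days); the text says only that Descript's timelines are set by internal policy, so treat the numbers as placeholders.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import List

# Hypothetical remediation windows in days, for illustration only.
REMEDIATION_DAYS = {"critical": 7, "high": 30, "medium": 90, "low": 180}
SEVERITY_RANK = {"critical": 0, "high": 1, "medium": 2, "low": 3}


@dataclass
class Finding:
    finding_id: str
    severity: str  # one of the keys in REMEDIATION_DAYS
    found_on: date

    @property
    def due(self) -> date:
        # Deadline = discovery date plus the window for this severity.
        return self.found_on + timedelta(days=REMEDIATION_DAYS[self.severity])


def triage(findings: List[Finding]) -> List[Finding]:
    # Most severe first; within a severity, the earliest deadline first.
    return sorted(findings, key=lambda f: (SEVERITY_RANK[f.severity], f.due))


def overdue(findings: List[Finding], today: date) -> List[Finding]:
    # Open findings whose remediation deadline has already passed.
    return [f for f in findings if f.due < today]
```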

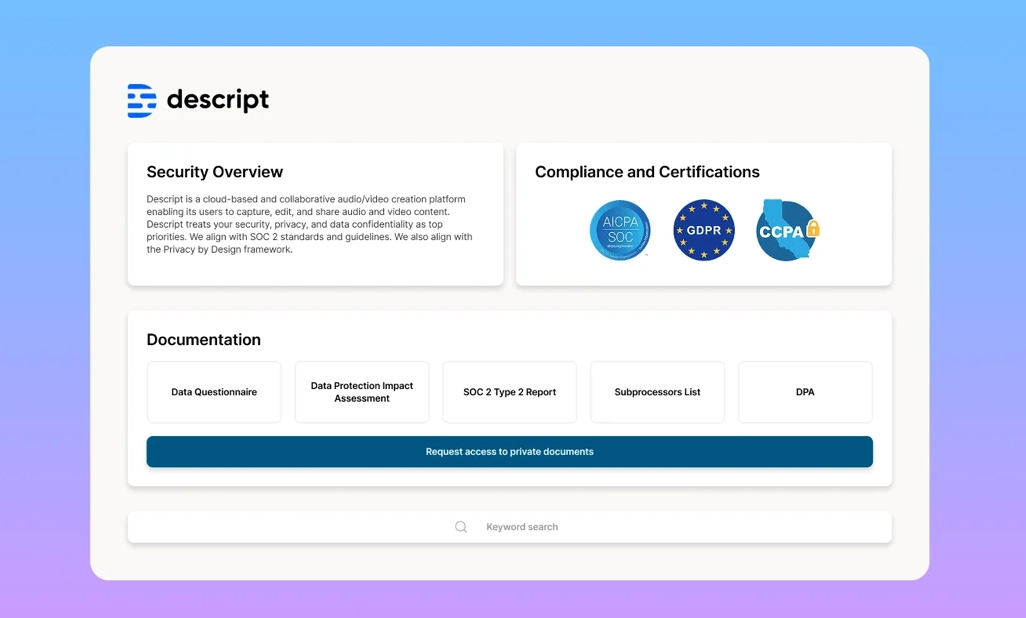

5. Compliance & audit readiness

If your organization is subject to regulations such as GDPR or CCPA, or to audit frameworks such as SOC 2, your editing tools must align.

Look for:

- Certifications/audits: Does the vendor hold recognized compliance badges (like SOC 2 Type II)?

- Privacy frameworks: Are data practices aligned with Privacy by Design, GDPR, CCPA, etc.?

- Data subject rights: Can users access, correct, delete, or port their data?

- Third-party agreements: Are there Data Processing Agreements (DPAs) with vendors?

What we do:

Descript aligns with SOC 2 standards, GDPR, and CCPA, and follows a Privacy by Design approach. Users have the right to access, correct, delete, and port their data. We limit third-party sharing and require strict data protection commitments.

6. Data handling & minimization

Just because you can collect data doesn’t mean you should. For sensitive media and transcripts, companies should:

- Use the minimum amount of data required

- Delete data they no longer need

- Isolate or anonymize where possible

What we do:

- Media, transcripts, and project metadata are stored to enable collaboration, versioning, and access.

- Transcription sharing with Descript (for training or improvement) is opt-in and disabled by default.

- Usage analytics exclude private project data.

- If you delete your project or account, associated data is permanently deleted within 30 days.
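The deletion and opt-in commitments in this list lend themselves to automated checks. Here is a minimal sketch, assuming a hypothetical `StoredProject` record: it flags deleted projects still present in storage after the 30-day window, and it treats only live, opted-in projects as eligible for training. It illustrates the policy as stated above, not Descript's actual pipeline.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Optional

# The text above says deleted data is permanently removed within 30 days.
PURGE_WINDOW = timedelta(days=30)


@dataclass
class StoredProject:  # hypothetical record type, for illustration
    project_id: str
    deleted_at: Optional[datetime] = None  # set when the user deletes the project
    share_for_training: bool = False  # opt-in: off unless the user turns it on


def overdue_for_purge(stored: List[StoredProject], now: datetime) -> List[str]:
    # `stored` = records still physically present; any deleted record
    # past its 30-day window is a policy violation to investigate.
    return [
        p.project_id
        for p in stored
        if p.deleted_at is not None and now > p.deleted_at + PURGE_WINDOW
    ]


def training_eligible(stored: List[StoredProject]) -> List[str]:
    # Only live projects whose owner explicitly opted in.
    return [p.project_id for p in stored if p.deleted_at is None and p.share_for_training]
```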

7. Integration risk & supply chain control

Many tools rely on third-party services (storage, identity, email, avatars). Each integration is a potential weak link.

You should ask:

- What third parties are involved?

- What data is shared, and how is it protected?

- What contracts or assurances ensure compliance?

What we do:

Descript integrates with cloud storage providers (Google Cloud, AWS), transcription systems (OpenAI Whisper, Rev), identity providers (Stytch), support tools (Zendesk), payment providers (Stripe), and more. Every integration is governed by a specific data agreement, and we limit the data shared with each one.

Putting this into practice

It’s easy to be seduced by AI feature lists. But if the underlying security and compliance aren’t solid, those features can become liabilities.

Before you commit to an editing platform, map your risk surface:

- Which AI features will your team use?

- What data types will be handled (voices, transcripts, source video)?

- What legal or regulatory constraints must you meet?

- How do your identity, access, and audit policies apply?

Ask the vendor to show their trust or audit reports. Demand transparency. Push for proof of security, not just promises.

Why Descript isn’t just another “AI tool”

We built these security, compliance, and technical guardrails into Descript from the start, not as afterthoughts:

- All AI features are opt-in and under user control.

- Encryption, MFA, secure infrastructure, and physical security are baked in.

- Continuous vulnerability scanning and risk response are standard.

- Compliance with SOC 2, GDPR, CCPA, and Privacy by Design is integral.

- Users keep control over their media, transcripts, and whether Descript can use them for training.

- Integration with external services is carefully governed.

We don’t see security as a checkbox. It’s fundamental. When teams trust their tools, they can focus on creating great content instead of worrying about what might go wrong behind the scenes.